How to Install and Configure Apache Hadoop on a Single Node on CentOS 7

Tutorial Linux Indonesia -- Apache Hadoop is an open-source framework for storing large volumes of data in a distributed way and processing that data across a cluster of computers.

The project is built on the following components:

Hadoop Common: the Java libraries and utilities required by the other Hadoop modules.

HDFS (Hadoop Distributed File System): a scalable, Java-based file system distributed across multiple nodes.

MapReduce: a YARN-based framework for parallel processing of large data sets.

Hadoop YARN: a framework for cluster resource management.

In this beginner-focused tutorial, we will install Hadoop in pseudo-distributed mode on CentOS 7.

Install Java on CentOS 7

The first step is to install Java, because Apache Hadoop needs Java to run its utilities.

You can install Java with the command below.

[root@master ~]# yum install java-1.8.0-openjdk-devel -y

After the installation, confirm that Java was installed successfully by checking its version with the command below.

[root@master ~]# javac -version

javac 1.8.0_201

We also need to know the directory where Java is installed.

[root@master ~]# readlink -f /bin/javac

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64/bin/javac
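
The JDK root directory (the value JAVA_HOME should eventually hold) is the path above without its trailing /bin/javac. A minimal sketch of that derivation follows; the function name java_home_from is a hypothetical helper introduced here for illustration and is not part of Java or Hadoop.

```shell
# java_home_from is a hypothetical helper name used only for this sketch.
# It takes the resolved path of javac and prints the JDK root directory.
java_home_from() {
  # Remove the trailing /bin/javac component from the path.
  printf '%s\n' "${1%/bin/javac}"
}

# Example using the path printed by readlink above.
java_home_from /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64/bin/javac
```

On a live system the argument could come straight from readlink, for example java_home_from "$(readlink -f /bin/javac)".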

Installing Apache Hadoop on a Single Node

Next, create a new user account on your system without root privileges. We will use this account for the Hadoop installation path and for running Hadoop.

The home directory of the new account is /opt/hadoop.

[root@master ~]# useradd -d /opt/hadoop hadoop

[root@master ~]# passwd hadoop

Now download Apache Hadoop with the command below.

[root@master ~]# curl -O http://apache.javapipe.com/hadoop/common/hadoop-2.9.1/hadoop-2.9.1.tar.gz

Extract the Hadoop archive you just downloaded, copy its contents into /opt/hadoop/, and give the hadoop user ownership of that directory.

[root@master ~]# tar xfz hadoop-2.9.1.tar.gz

[root@master ~]# cp -rf hadoop-2.9.1/* /opt/hadoop/

[root@master ~]# chown -R hadoop:hadoop /opt/hadoop/
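
As an alternative to extracting and then copying, tar can unpack directly into the target directory while dropping the top-level folder of the archive. The sketch below demonstrates this on a throwaway archive in a temporary directory; the paths under $work are stand-ins created for the demo, not the real download.

```shell
# Build a small stand-in archive with the same layout as the Hadoop tarball.
work=$(mktemp -d)
mkdir -p "$work/hadoop-2.9.1/bin" "$work/target"
echo demo > "$work/hadoop-2.9.1/bin/hadoop"
tar -czf "$work/hadoop-2.9.1.tar.gz" -C "$work" hadoop-2.9.1

# Extract into the target directory, stripping the leading hadoop-2.9.1/ folder.
tar -xzf "$work/hadoop-2.9.1.tar.gz" -C "$work/target" --strip-components=1
ls "$work/target"
```

With the real archive, the target would be /opt/hadoop, followed by the same chown command shown above.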

Verify that every file in /opt/hadoop is owned by the hadoop user.

[root@master ~]# ls -al /opt/hadoop/

total 152

drwx------. 12 hadoop hadoop 284 Oct 29 02:27 .

drwxr-xr-x. 4 root root 34 Oct 29 01:49 ..

-rw-------. 1 hadoop hadoop 1077 Oct 29 02:28 .bash_history

-rw-r--r--. 1 hadoop hadoop 18 Apr 10 2018 .bash_logout

-rw-r--r--. 1 hadoop hadoop 703 Oct 29 01:36 .bash_profile

-rw-r--r--. 1 hadoop hadoop 231 Apr 10 2018 .bashrc

drwxr-xr-x. 2 hadoop hadoop 194 Oct 29 02:04 bin

drwxr-xr-x. 3 hadoop hadoop 20 Oct 29 01:17 etc

drwxr-xr-x. 2 hadoop hadoop 106 Oct 29 01:17 include

drwxr-xr-x. 3 hadoop hadoop 20 Oct 29 01:17 lib

drwxr-xr-x. 2 hadoop hadoop 239 Oct 29 01:17 libexec

-rw-r--r--. 1 hadoop hadoop 106210 Oct 29 01:17 LICENSE.txt

drwxrwxr-x. 3 hadoop hadoop 4096 Oct 29 02:17 logs

drwxrwxr-x. 2 hadoop hadoop 25 Oct 29 02:27 my_storage

-rw-r--r--. 1 hadoop hadoop 15915 Oct 29 01:17 NOTICE.txt

-rw-r--r--. 1 hadoop hadoop 1366 Oct 29 01:17 README.txt

drwxr-xr-x. 3 hadoop hadoop 4096 Oct 29 01:17 sbin

drwxr-xr-x. 4 hadoop hadoop 31 Oct 29 01:17 share

drwx------. 2 hadoop hadoop 80 Oct 29 01:43 .ssh

-rw-------. 1 hadoop hadoop 835 Oct 29 02:07 .viminfo

[root@master ~]#

Next, log in as the hadoop user you created earlier.

[root@master ~]# su - hadoop

Last login: Mon Oct 29 02:20:51 EDT 2018 on tty1

Configure the Hadoop and Java environment variables on your system by editing .bash_profile.

[hadoop@master ~]$ nano .bash_profile

Add the following lines at the end of the file:

export JAVA_HOME=/usr/java/default

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/jre/lib:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/opt/hadoop

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Verify that the Java and Hadoop variables are set correctly with the commands below.

[hadoop@master ~]$ source .bash_profile

[hadoop@master ~]$ echo $HADOOP_HOME

/opt/hadoop

[hadoop@master ~]$ echo $JAVA_HOME

/usr/java/default

[hadoop@master ~]$
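
Checking the variables one by one gets tedious as the list grows. The sketch below wraps the check in a small function; check_vars is a hypothetical helper name introduced for this example, and the variable names passed to it are the ones exported in .bash_profile above.

```shell
# check_vars is a hypothetical helper: it prints each variable name that is
# unset or empty and returns a non-zero status if any is missing.
check_vars() {
  status=0
  for name in "$@"; do
    # Indirectly read the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      status=1
    fi
  done
  return "$status"
}

# Run after sourcing .bash_profile; the message is printed only on failure.
check_vars JAVA_HOME HADOOP_HOME HADOOP_COMMON_HOME HADOOP_HDFS_HOME \
  HADOOP_MAPRED_HOME HADOOP_YARN_HOME || echo "re-check .bash_profile"
```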

Finally, configure SSH key-based authentication for the hadoop account by running the commands below.

[hadoop@master ~]$ ssh-keygen -t rsa

[hadoop@master ~]$ ssh-copy-id master.bagol69.com
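
For scripted setups, the same key generation can run without prompts. The sketch below works in a scratch directory so it does not touch a real ~/.ssh; the directory and file names under $demo are assumptions made for the demo, and the whole step is skipped if ssh-keygen is not installed.

```shell
# Scratch directory so the sketch does not modify a real ~/.ssh.
demo=$(mktemp -d)
if command -v ssh-keygen >/dev/null 2>&1; then
  # -N "" sets an empty passphrase, -f names the key file, -q suppresses output.
  ssh-keygen -q -t rsa -N "" -f "$demo/id_rsa"
  # Appending the public key to authorized_keys is what ssh-copy-id
  # does on the target host.
  cat "$demo/id_rsa.pub" >> "$demo/authorized_keys"
  chmod 600 "$demo/authorized_keys"
fi
```

On the real machine the key file would be ~/.ssh/id_rsa, and ssh-copy-id remains the simpler way to authorize it.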

Now it is time to set up the Hadoop cluster on a single node in pseudo-distributed mode by editing its configuration files.

The Hadoop configuration files are located in $HADOOP_HOME/etc/hadoop/, where $HADOOP_HOME is /opt/hadoop in this tutorial (the home directory of the hadoop user).

Once you are logged in as the hadoop user, you can start editing these files.

The first file to edit is core-site.xml. It contains information such as the port number used by Hadoop, the memory allocated for the file system, the memory limit for storing data, and the size of the read/write buffers.

Add the following properties between the <configuration> and </configuration> tags.

[hadoop@master ~]$ nano /opt/hadoop/etc/hadoop/core-site.xml

[hadoop@master ~]$ mkdir -p /app/hadoop/tmp

[hadoop@master ~]$ chown -R hadoop:hadoop /app/hadoop/tmp

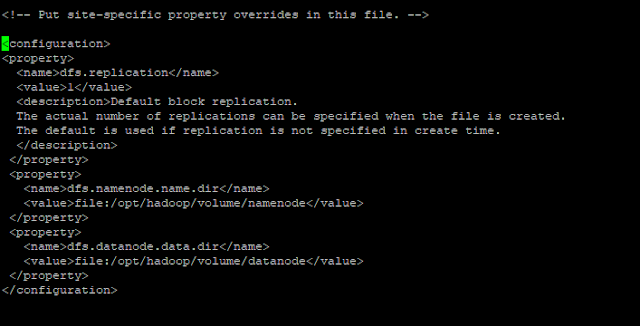
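
As a text companion to the screenshot, here is a sketch of what such a core-site.xml might look like. The values are assumptions, not necessarily those in the screenshot: the NameNode address is inferred from the hostname used in this tutorial and from port 9000 in the socket listing further down, and the temporary directory is the one created above. The sketch writes to a scratch directory rather than the live configuration.

```shell
# Write a sample core-site.xml to a scratch location (not the live config).
conf_dir=$(mktemp -d)
cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Assumed value: NameNode address on this tutorial's host, port 9000 -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master.bagol69.com:9000</value>
  </property>
  <!-- Assumed value: the temporary directory created with mkdir above -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
  </property>
</configuration>
EOF
cat "$conf_dir/core-site.xml"
```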

Next, open and edit hdfs-site.xml. This file contains the data replication value and the namenode and datanode paths on the local file system.

In this guide, I use the /opt/volume directory to store my Hadoop file system.

Because I have chosen /opt/volume as the storage location for the Hadoop file system,

I need to create the directories (datanode and namenode) from the root account and give the hadoop user full access to them

by running the commands below.

[root@master ~]# mkdir -p /opt/volume/namenode/

[root@master ~]# mkdir -p /opt/volume/datanode/

[root@master ~]# chown -R hadoop:hadoop /opt/volume/

Now verify that the directory holding namenode and datanode is owned by the hadoop user with the command below.

[root@master ~]# ls -al /opt/

total 0

drwxr-xr-x. 4 root root 34 Oct 29 01:49 .

dr-xr-xr-x. 17 root root 224 Oct 28 23:59 ..

drwx------. 12 hadoop hadoop 284 Oct 29 03:36 hadoop

drwxr-xr-x. 4 hadoop hadoop 38 Oct 29 01:49 volume

[root@master ~]#

[root@master ~]# exit

logout

[hadoop@master ~]$

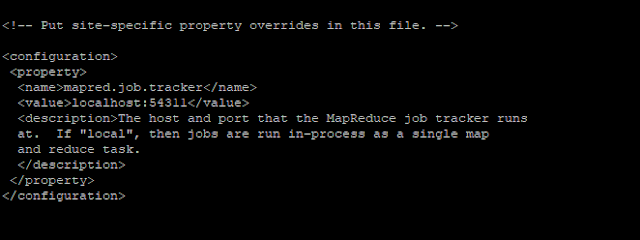
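
A sketch of an hdfs-site.xml that points HDFS at the directories created above could look like the following. The property names are standard Hadoop 2 settings, but the values are assumptions based on the /opt/volume layout in this guide and may differ from the screenshot; dfs.replication is left at its default here. The file is written to a scratch directory, not the live configuration.

```shell
# Write a sample hdfs-site.xml to a scratch location (not the live config).
hdfs_conf_dir=$(mktemp -d)
cat > "$hdfs_conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Assumed value: namenode metadata directory created as root above -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///opt/volume/namenode</value>
  </property>
  <!-- Assumed value: datanode block directory created as root above -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///opt/volume/datanode</value>
  </property>
</configuration>
EOF
cat "$hdfs_conf_dir/hdfs-site.xml"
```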

Now edit mapred-site.xml. This file specifies the MapReduce framework that I am using.

[hadoop@master ~]$ nano etc/hadoop/mapred-site.xml
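
The usual setting for running MapReduce jobs on YARN is the mapreduce.framework.name property. The sketch below assumes that is the intent here, since the original file content is not shown; it writes a sample file to a scratch directory rather than the live configuration.

```shell
# Write a sample mapred-site.xml to a scratch location (not the live config).
mapred_conf_dir=$(mktemp -d)
cat > "$mapred_conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Assumed value: run MapReduce jobs on top of YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
cat "$mapred_conf_dir/mapred-site.xml"
```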

Finally, set JAVA_HOME for the Hadoop environment in the hadoop-env.sh file.

[hadoop@master ~]$ nano /opt/hadoop/etc/hadoop/hadoop-env.sh

Before

export JAVA_HOME=/usr/java/default

After

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64
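
The same change can be made without opening an editor. The sketch below rewrites the JAVA_HOME line with sed on a scratch copy; the temporary file stands in for hadoop-env.sh, and the new value is the JDK path found with readlink earlier in this tutorial.

```shell
# Scratch file standing in for /opt/hadoop/etc/hadoop/hadoop-env.sh.
env_file=$(mktemp)
echo 'export JAVA_HOME=/usr/java/default' > "$env_file"

# JDK root as reported by readlink earlier in this tutorial.
new_home=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64

# Rewrite the JAVA_HOME line, then move the result into place.
sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$new_home|" "$env_file" > "$env_file.new"
mv "$env_file.new" "$env_file"
cat "$env_file"
```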

After that, replace the localhost entry in the slaves file with the hostname of your Linux machine.

[hadoop@master ~]$ nano etc/hadoop/slaves

master.bagol69.com

With the single-node Hadoop setup configured, it is time to initialize the HDFS file system by formatting the storage directory /opt/volume/namenode with this command.

[hadoop@master ~]$ hdfs namenode -format

18/10/29 03:51:15 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master.bagol69.com/10.10.10.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.9.1

STARTUP_MSG: classpath = /opt/hadoop/etc/hadoop:/opt/hadoop/share/hadoop/common/lib/commons-collections-3.2.2.jar:[... remaining jar entries under /opt/hadoop/share/hadoop omitted ...]:/opt/hadoop/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r e30710aea4e6e55e69372929106cf119af06fd0e; compiled by 'root' on 2018-04-16T09:33Z

STARTUP_MSG: java = 1.8.0_191

************************************************************/

18/10/29 03:51:16 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/10/29 03:51:16 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-594323a6-b47f-4121-9f5d-9cf4498d043e

18/10/29 03:51:23 INFO namenode.FSEditLog: Edit logging is async:true

18/10/29 03:51:24 INFO namenode.FSNamesystem: KeyProvider: null

18/10/29 03:51:24 INFO namenode.FSNamesystem: fsLock is fair: true

18/10/29 03:51:24 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

18/10/29 03:51:41 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/volume/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

18/10/29 03:51:42 INFO namenode.FSImageFormatProtobuf: Image file /opt/volume/namenode/current/fsimage.ckpt_0000000000000000000 of size 323 bytes saved in 0 seconds .

18/10/29 03:51:42 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/10/29 03:51:42 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master.bagol69.com/10.10.10.1

************************************************************/

[hadoop@master ~]$

You can now start the Hadoop cluster services with the commands below.

[hadoop@master ~]$ start-dfs.sh

Starting namenodes on [master.bagol69.com]

master.bagol69.com: namenode running as process 2532. Stop it first.

master.bagol69.com: datanode running as process 1820. Stop it first.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode running as process 2799. Stop it first.

[hadoop@master ~]$

[hadoop@master ~]$ start-yarn.sh

starting yarn daemons

resourcemanager running as process 2961. Stop it first.

master.bagol69.com: nodemanager running as process 3071. Stop it first.

[hadoop@master ~]$
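
One way to confirm that all five daemons are up is to inspect the output of jps, the JDK tool that lists running Java processes. The sketch below reports which expected daemons are absent from a given jps listing; missing_daemons is a hypothetical helper name, and the sample listing reuses the process IDs from the output above.

```shell
# missing_daemons is a hypothetical helper: given jps output as its first
# argument, it prints the name of every expected Hadoop daemon not listed.
missing_daemons() {
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <name>" per line, so compare the second field exactly.
    printf '%s\n' "$1" |
      awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
      echo "$daemon"
  done
}

# Sample listing in jps format, using the process IDs shown above.
sample="2532 NameNode
1820 DataNode
2799 SecondaryNameNode
2961 ResourceManager
3071 NodeManager"
missing_daemons "$sample"
```

On a live node the call would be missing_daemons "$(jps)"; no output means every daemon was found.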

You can list all open sockets for Apache Hadoop on your system with the command below.

[hadoop@master ~]$ ss -tuln

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port

udp UNCONN 0 0 127.0.0.1:323 *:*

udp UNCONN 0 0 ::1:323 :::*

tcp LISTEN 0 100 127.0.0.1:25 *:*

tcp LISTEN 0 128 *:50010 *:*

tcp LISTEN 0 128 *:50075 *:*

tcp LISTEN 0 128 *:50020 *:*

tcp LISTEN 0 128 10.10.10.1:9000 *:*

tcp LISTEN 0 128 *:50090 *:*

tcp LISTEN 0 128 127.0.0.1:45739 *:*

tcp LISTEN 0 128 *:50070 *:*

tcp LISTEN 0 128 *:22 *:*

tcp LISTEN 0 128 :::8088 :::*

tcp LISTEN 0 100 ::1:25 :::*

tcp LISTEN 0 128 :::13562 :::*

tcp LISTEN 0 128 :::8030 :::*

tcp LISTEN 0 128 :::8031 :::*

tcp LISTEN 0 128 :::8032 :::*

tcp LISTEN 0 128 :::8033 :::*

tcp LISTEN 0 128 :::8040 :::*

tcp LISTEN 0 128 :::8042 :::*

tcp LISTEN 0 128 :::40205 :::*

tcp LISTEN 0 128 :::22 :::*

[hadoop@master ~]$
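
To pick the listening ports out of that listing, the local address column can be reduced to its port number. The sketch below does this with awk; listening_ports is a hypothetical helper name, and it assumes the column layout shown above, where the fifth field is Local Address:Port.

```shell
# listening_ports is a hypothetical helper: it reads `ss -tuln` style output
# on standard input and prints the port of every socket in LISTEN state.
listening_ports() {
  awk '$2 == "LISTEN" { n = split($5, parts, ":"); print parts[n] }'
}

# Sample lines taken from the listing above.
printf '%s\n' \
  'tcp LISTEN 0 128 *:50070 *:*' \
  'udp UNCONN 0 0 127.0.0.1:323 *:*' \
  'tcp LISTEN 0 128 :::8088 :::*' | listening_ports
```

On a live node this would be ss -tuln | listening_ports | sort -n. In Hadoop 2.x, 50070 is the default NameNode web UI port and 8088 the default ResourceManager web UI port.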

Testing Apache Hadoop

To confirm that Apache Hadoop is running normally, create a directory in HDFS for your client data with the command below.

[hadoop@master ~]$ hdfs dfs -mkdir /bagol69_com

Next, copy a file from your Linux machine into HDFS storage with the command below.

[hadoop@master ~]$ ls

bin include libexec logs NOTICE.txt sbin

etc lib LICENSE.txt my_storage README.txt share

[hadoop@master ~]$ hdfs dfs -put README.txt /bagol69_com

You can view the file you copied into HDFS storage with the commands below.

[hadoop@master ~]$ hdfs dfs -cat /bagol69_com/README.txt

For the latest information about Hadoop, please visit our website at:

http://hadoop.apache.org/core/

and our wiki, at:

http://wiki.apache.org/hadoop/

....

[hadoop@master ~]$ hdfs dfs -ls /bagol69_com/README.txt

-rw-r--r-- 3 hadoop supergroup 1366 2018-10-29 03:57 /bagol69_com/README.txt

[hadoop@master ~]$

To retrieve data from HDFS back to your Linux machine, use the command below.

[hadoop@master ~]$ hdfs dfs -get /bagol69_com/ ./

[hadoop@master ~]$ ls

bagol69_com etc lib LICENSE.txt my_storage README.txt share

bin include libexec logs NOTICE.txt sbin

[hadoop@master ~]$

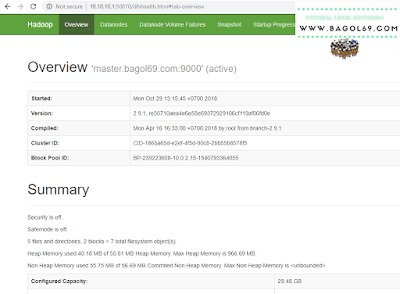

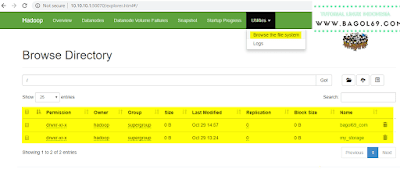

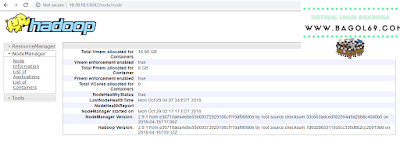

Hadoop Web GUI Services

To access the Hadoop services from your browser, visit this link

or

To view the directory created earlier (Browse Directory).

To view cluster and application information (ResourceManager).

To view NodeManager information.

All that remains is to launch a Hadoop-compatible application, and you are ready to use Hadoop!

For additional information, see the Apache Hadoop documentation and the Hadoop Wiki.